As I tried to keep up with the never-ending deluge of news about the forthcoming AI takeover of human society, a talk at a recent conference made me pause. Instead of having to consider whether the intelligent robots would liberate us or kill us (it’s only ever one of those two options, I find), I took a moment to worry about the machines themselves.

The conference, a meeting of computer scientists and neuroscientists at New York University, was called “Canonical Computation in Brains and Machines.” Zachary Mainen, a neuroscientist at the Champalimaud Centre for the Unknown in Lisbon, came on stage to talk about depression and hallucinations… in machines.

He admitted right at the start of this talk that it all might seem “a bit odd” to consider. But he had a sound rationale. “I’m drawing on the field of computational psychiatry, which assumes we can learn about a patient who’s depressed or hallucinating from studying AI algorithms like reinforcement learning,” he told Science magazine after the lecture. “If you reverse the arrow, why wouldn’t an AI be subject to the sort of things that go wrong with patients?”

If you want to know about the mechanisms for such moods in machine brains, things get more abstract. “Depression and hallucinations appear to depend on a chemical in the brain called serotonin,” Dr. Mainen told Science. “It may be that serotonin is just a biological quirk. But if serotonin is helping solve a more general problem for intelligent systems, then machines might implement a similar function, and if serotonin goes wrong in humans, the equivalent in a machine could also go wrong.”

There are a lot of assumptions here, but it really made me think. We are steeped in stories about AI that will take over large parts of human endeavor, from automation that will threaten jobs to algorithms that could decide on medical care or help judges to sentence people in courts. In the black-or-white world of new technology, we build up an impression of the future from snippets of these extreme scenarios. But in virtually all the snippets we hear, the AI is a cold algorithm. However powerful, however “intelligent” these machines are, they are just code–and they all have off switches.

But what if an intelligent car got upset if it ran a red light or depressed if it hit a pedestrian? How would you conduct a war with an intelligent drone that felt remorse when it bombed innocent civilians? Would you feel OK about sending a bomb disposal robot out into the field, knowing that its code somehow “felt” fear?

Emotional robots aren’t a radical departure from the story world we all know–C3PO is anxious and unadventurous, while R2D2 is brave and fearless. Having been given a brain the size of a planet, Marvin is paranoid. HAL 9000 gets scared as it is switched off by Dave Bowman. The replicants of Blade Runner feel some combination of anger, hatred, and fear. We enjoy the stories of those emotional AIs precisely because the feelings help us understand them.

There are plenty of AIs inside robots today that have been programmed to display emotions–some modern “emotional” robots work by aping the facial and bodily movements of humans when they are sad, happy or angry and then let the human mind fill in the blanks. If a robot is curled up in a ball, hiding its face and shaking, we might interpret that as “fear” and then our own emotions would allow us to treat the machine accordingly.

Octavia, a humanoid robot, is designed to fight fires on U.S. Navy ships. “She” can display an impressive array of facial expressions, look confused by tilting her head to one side or seem alarmed by lifting up both of her eyebrows. Huge amounts of sensory input help Octavia gauge the world around her and she can respond accordingly. But you’re unlikely to think that she is actually feeling any of the emotions she displays.

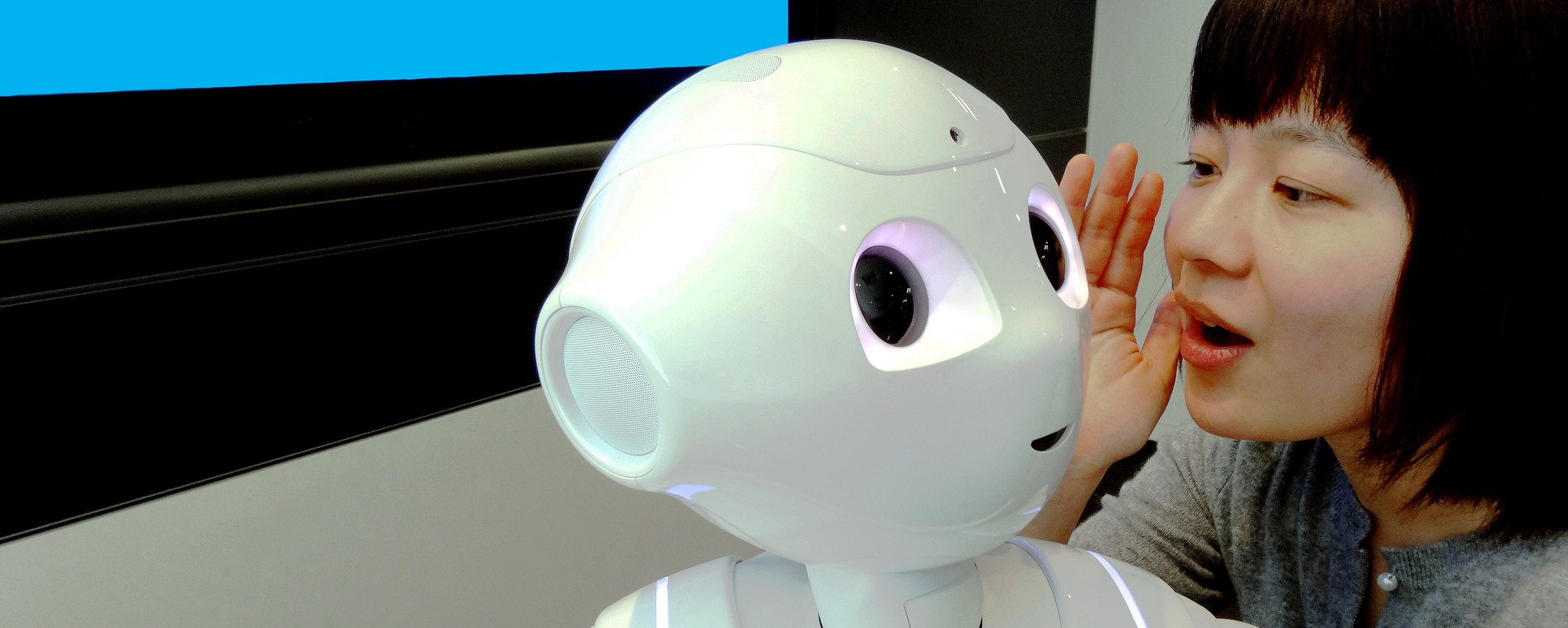

The Japanese robot Pepper has an “endocrine-type multi-layer neural network” to help it display emotions on a screen on its chest. Pepper sighs when unhappy and can also “‘feel’ joy, surprise, anger, doubt and sadness.”

But what if, as Dr. Mainen suggests, dark moods like depression or strange visions and hallucinations are not programmed into AIs but just emerge from other complex processes going on in the code? If you don’t know where these “feelings” are coming from in the AI brain (and if this then becomes yet another “black box” at the heart of complex AIs), would you feel safe in the presence of that machine? And would a depressed AI need counselling and time off from its job? And what about our responsibility to give the AI treatments to make it “feel” better? I don’t have a clue how to think about this, partly because it’s hard to get a handle on exactly what these new, artificial minds will be able to feel. Let’s hope we take this part of the AI future as seriously as all the rest.

How We Get To Next was a magazine that explored the future of science, technology, and culture from 2014 to 2019. This article is part of our Robots vs Animals section, which examines human attempts to build machines better than nature’s.