“Music is about human beings communicating with other human beings,” said Andrew Dubber, professor of music industry innovation at Birmingham City University and director at Music Tech Fest.

Live music has existed for as long as humans have been communicating–that is, since the dawn of man. Here’s a quick history.

100,000 years ago: First prehistoric performances. Humans “perform” by mimicking sounds in nature, meteorological phenomena, or animal calls.

40,000 years ago: The first musical instrument is made of animal bones. The earliest-known flutes are thought to have been used for “recreation or religious purposes,” experts say.

8th century B.C.–6th century A.D.: Ancient musical performances. In ancient Greek and Roman societies music performance becomes ubiquitous at marriages, funerals, other religious ceremonies, and within theatre. Persian and Indian classical music is used in comparable fashion.

Middle Ages: Churches become the main music venues in the Western world. Pipe organs are installed in big cathedrals with natural acoustics, adding a spiritual and imposing character to the music.

Baroque Era: Multiple-sized music venues. Composers such as Bach do a lot of their playing in churches smaller than a Gothic cathedral. In the late 1700s, Mozart performs his compositions at events in grand, but not gigantic, rooms. People now dance to the music, too.

1700s: Opera emerges as a new form of entertainment. Big music halls, such as the still very popular La Scala (1778) in Milan, are constructed. Musical ensembles–by then called orchestras–grow gradually throughout the 18th and 19th centuries.

1870s: The microphone debuts. David Edward Hughes invents the carbon microphone (also developed by Berliner and Edison), a transducer that converts sound to an electrical audio signal for the first time.

Early 1900s: Jazz develops alongside orchestral music. Originally played and danced to in smoky bars and public houses, jazz paves the way for the modern concert as we now understand it.

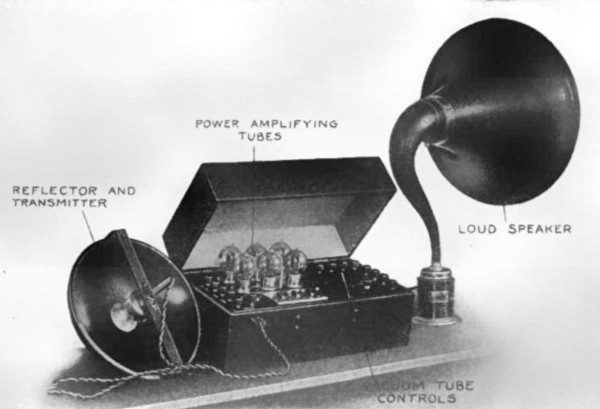

1910s: The PA is born. Magnavox’s Peter Jensen and Edwin Pridham begin experimenting with sound reproduction using a carbon microphone; soon afterward they file the first patent for a moving-coil loudspeaker. With their “Sound Magnifying Phonograph,” the modern public address system (PA) is born–a device we still use today at nearly every live concert.

Early 1930s: The first electric amplifiers for single instruments appear. The introduction of electrolytic capacitors and rectifier tubes makes it possible to produce economical, built-in power supplies that can plug into a wall socket.

1931: The “Frying Pan” guitar goes electric. Built by George Beauchamp and Adolph Rickenbacker of Electro String (later Rickenbacker), the amplified lap steel Hawaiian guitar becomes the first electric stringed instrument. Legendary models by Leo Fender and Gibson’s Les Paul follow suit.

1941: Rickenbacker sells the first line of guitar combo amplifiers. Although rather tame by today’s standards, the amplifiers are capable of making big, unprecedented noise, and become hugely popular and influential.

1950s: Rock ‘n’ Roll is born. Several groups in the United States experiment with new musical forms by fusing country, blues, and swing to produce the earliest examples of what becomes known as “rock and roll.” The rock concert grows into an entertainment standard around the world.

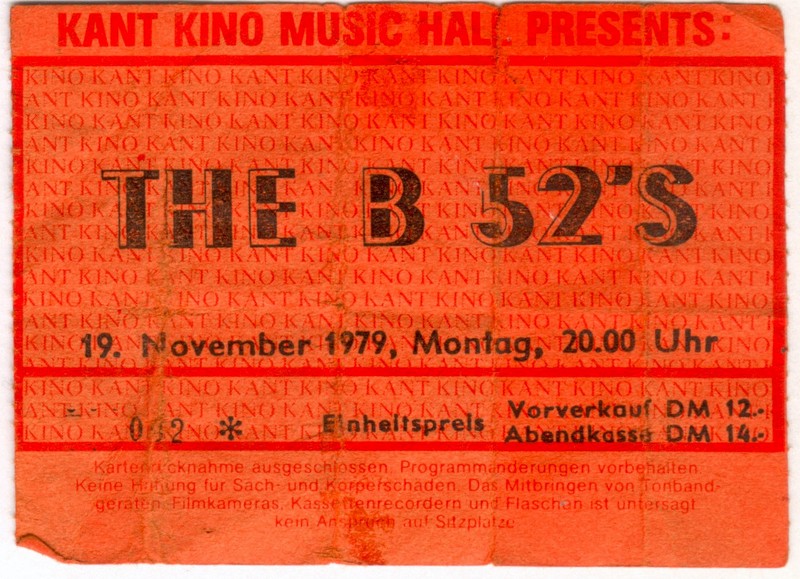

1960s: The modern concert format emerges. American promoter Bill Graham develops the format for pop music concerts. He introduces advance ticketing (and later online tickets), modern security measures, and hygiene standards.

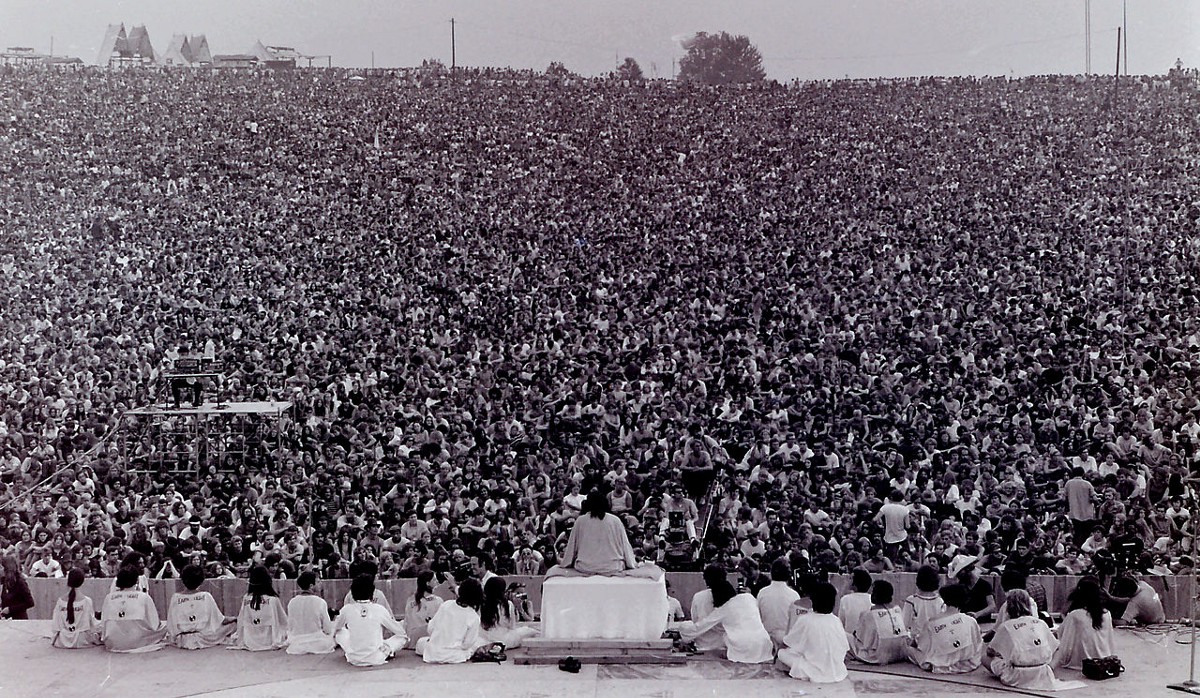

1960s–1970s: Live music exerts a major influence on popular culture. Large-scale amplification facilitates the expansion of massive music festivals–the prime example being 1969’s Woodstock Festival, attended by over 400,000 people.

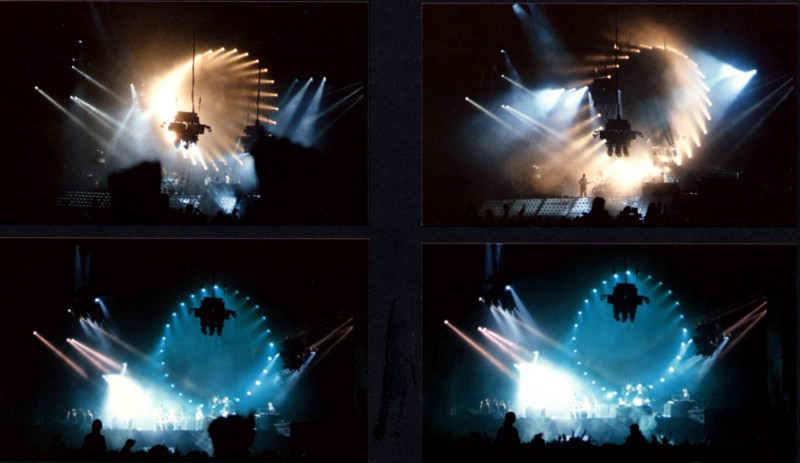

1970s: Pink Floyd pioneers concert visuals and special effects. The British rockers incorporate huge screens, strobe lights, pyrotechnics (exploding flash pots and fireworks), and special effects (from helium balloons to a massive artificial wall). The band also uses quadraphonic speaker systems and synthesizers.

The Gig of the Future

Since the 1970s, the basic format and technology behind the rock concert have remained unchanged. Sure, sound systems have gotten louder, and light shows now incorporate 3D projections and massive holograms–with DJ Eric Prydz’s hologram at his 2014 Madison Square Garden concert the largest to date–but no matter the genre, the setup is the same.

So, will the concert of the future look any different at all? At the rate technology’s changing–yes. Since the 1980s, the MIDI protocol has allowed for triggering computer sounds via MIDI controllers that resemble traditional keyboards, guitars, mixers, etc.
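To make that concrete, here is a minimal sketch of what a MIDI controller actually sends when a key is pressed: a three-byte “Note On” message (a status byte carrying the channel, then the note number, then the velocity). The function names `note_on`, `note_off`, and `describe` are our own, chosen for illustration; they are not part of any library, and nothing here talks to real hardware.

```python
def _check(value: int, upper: int, name: str) -> int:
    """Validate that a MIDI field fits in its allowed range."""
    if not 0 <= value <= upper:
        raise ValueError(f"{name} must be between 0 and {upper}")
    return value


def note_on(note: int, velocity: int = 64, channel: int = 0) -> bytes:
    """Build a Note On message: status 0x90 plus channel, note, velocity."""
    _check(channel, 15, "channel")
    _check(note, 127, "note")
    _check(velocity, 127, "velocity")
    return bytes([0x90 | channel, note, velocity])


def note_off(note: int, channel: int = 0) -> bytes:
    """Build a Note Off message: status 0x80 plus channel, note, velocity 0."""
    _check(channel, 15, "channel")
    _check(note, 127, "note")
    return bytes([0x80 | channel, note, 0])


def describe(message: bytes) -> str:
    """Decode a three-byte channel message into a readable string."""
    status, note, velocity = message
    kinds = {0x90: "note on", 0x80: "note off"}
    kind = kinds.get(status & 0xF0, "other")
    # Channels are stored as 0-15 but conventionally shown as 1-16.
    return f"{kind}: channel {(status & 0x0F) + 1}, note {note}, velocity {velocity}"


if __name__ == "__main__":
    middle_c = note_on(60, velocity=100)  # note 60 is middle C
    print(" ".join(f"{byte:02x}" for byte in middle_c))
    print(describe(middle_c))
    print(describe(note_off(60)))
```

Because the message only says which note was triggered and how hard, the receiving computer is free to map it to any sound at all, which is why a MIDI controller can look like a keyboard, a guitar, or something else entirely.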

Today, musicians can perform in completely new ways, further unbound by the constraints of conventional instruments. Professor Dubber points us in the direction of British sound designer Ross Flight, who uses Microsoft’s Kinect motion-sensing device to perform live by moving in space. Choreographer Laura Kriefman creates music by dancing; producer Tim Exile invented his own electronic instruments so as to perform “exactly how he wanted”; and beatboxer Beardyman built a real-time music production machine that “doesn’t have any onboard sounds” to allow him to create loops and layers from just the sounds he makes with his voice.

Interactivity

Interaction between fans and performers is becoming more intricate–as is interaction between members of the audience themselves.

Social media is an obvious facilitator here. Snapchat’s “Our Story” feature for live events creates a compilation of shared “snaps” within certain physical spaces, uniting people while broadcasting their collective experiences to the rest of the world.

U.S. event technologies company Cantora is developing as-yet-unnamed software that “takes the ebbs and flows” of an audience to alter the physical venue, while London technology company XOX has developed a wristband that claims to track audience emotions by “evaluating the electrical characteristics of a wearer’s skin in real time and processes this to identify changes.”

Beyond social and software, innovation is happening at the artist level as well. British songwriter and composer Imogen Heap has relied on technology extensively to interact and collaborate with her fans. In 2009, she used Vokle, an online auditorium, to hold open cello auditions for her North American tour. Later, she opened the virtual auditions to singers and choirs, inviting them to submit videos on YouTube. The winners ended up with her on stage.

Wearable technology

Heap is also a pioneer in the use of wearable technology in live music performance. She has been working for years toward a more flexible live setup that would let her move freely while performing multiple musical production tasks on the fly.

In 2011, she unveiled a pair of high-tech gloves that allow her to amplify, record, and loop acoustic instruments and her voice; play virtual instruments; and manipulate these sounds live.

The MiMu gloves combine ground-breaking technology with sensors and microphones. Heap used them to record and later perform live “Me the Machine,” the first song she created solely with the gloves.

“The thing that has irritated me over the years is the lack of flexibility in music remote controllers,” Heap told me in an interview. “Technologically, I’ve been trying to free up the performer and enable them to control every kind of nuance and detail (e.g., bending a note or moving through different octaves very quickly with your hand), like playing through stars or playing a bassline like a basketball instead of having to be very rigid.”

Pianos and guitars are amazing instruments but their use is predetermined. “Often when you’re producing, especially electronic music, you’re using sounds which don’t have a body or a physical presence. It’s about bringing those digital sounds to life on the move, wirelessly, on the stage,” she said.

Alongside performance tools, wearable technology has infiltrated the audience experience, too. Here are a few examples:

- Smart earbuds, like those developed by San Francisco’s Doppler Labs, let users customize live music via a smartphone app that enables them to adjust the volume and EQ and to apply effects to the sounds of the environment around them.

- Nada, a wearable smart ticket combining a cashless payment method and paperless tickets, captures real-world attendee behavior at large scale.

- The Basslet, a wristband developed in Germany, lets listeners take the live gig experience anywhere by connecting their music players and making them “feel the bass and depth of music through their body.”

Ticketing

Paperless tickets are slowly replacing traditional ones. Una, a British startup, is on a mission to eradicate scalping by providing users with a plastic membership card with embedded chips to be scanned at venues. The card works in conjunction with an online account and can also be used for cashless payments.

The first cash-free festivals held in the United States (Mysteryland), Canada (Digital Dreams), and the Czech Republic (Majales) took place in 2014 with the help of smart-ticketing pioneer Intellitix.

Meanwhile, a subscription-based service has been making waves in the ticketing world. Jukely is essentially a Spotify for live gigs–you can pay $25 per month and attend as many concerts as you like from the roster the company offers.

Live streaming

It was once a requirement that you be physically present at a concert to experience it. That boundary is now broken: live streaming has enabled people from all over the world to gather in a single online “room.”

Artists like U2 have used applications like Meerkat and Periscope to broadcast their concerts to fans at home, and the activity has only grown in popularity since Coachella Festival streamed on YouTube in 2011.

Now apps like Stageit, through which audience members can contribute additional cash amounts to the artist performing, and Huzza, which allows for tipping or buying merchandise, offer performers expanded solutions for monetizing live streaming.

Virtual reality

The idea of a concert in virtual reality isn’t new. Several South Korean record labels introduced V-concerts as early as 1998–with varying degrees of success.

Then, rather unexpectedly, English singer-songwriter Richard Hawley played a gig on Second Life in 2007.

More recently, Spotify CEO Daniel Ek said that the company plans to roll out a virtual reality concert system that will enable fans to enjoy live concerts from the comfort of their living rooms. Ek hinted at virtual merchandise booths linked to the users’ Apple Pay accounts and joked that a virtual bouncer may also be included.

Artist payments and Mycelia

With profit margins for newer artists and concert promoters notoriously low, and many festivals shuttering their doors over the past few years, the live concert market is an unpredictable one.

The challenge of creating a sustainable and equitable artist remuneration system for the gig of the future is crucial.

Here, Imogen Heap might have the answer again. In October, she released her single “Tiny Human” on Mycelia. Mycelia is a revolutionary transaction system using the blockchain–the architecture that underpins the popular electronic currency Bitcoin–that has the potential to transform how live musicians are paid.

Mycelia brings together artists, developers, and coders in a formal movement to shape the direction of the music industry in favor of the artist.

“With live for instance, if somebody covered one of my songs during a big show, sometimes it happens that that song doesn’t get registered properly and as a result [I] wouldn’t receive any royalties from the song’s performance,” said Heap.

“But there are devices–little gizmos sitting in a corner of the club–that find algorithmically who the songwriter of any performed song is and help calculate the amount of payment due–according to venue and audience size,” she explained.

“At the moment, any transaction would go through different collecting societies. In the future, it would just come directly to me as a songwriter. It’s becoming possible to break down the barriers of where institutions have previously got in the way of the flow of information and the flow of creativity. I’m excited about this, more than ever,” she said.

How We Get To Next was a magazine that explored the future of science, technology, and culture from 2014 to 2019. This article is part of our Fast Forward section, which examines the relationship between music and innovation.